BitNet RPi 4B Setup Guide

Prerequisites

This guide was tested on a Raspberry Pi 4B with 2 GB of RAM, running Raspberry Pi OS (64-bit), release 2024-11-19.
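Before installing anything, it may help to confirm that the board is running a 64-bit system, since that is the only configuration this guide was tested on. The following is a minimal sketch: `is_arm64` is a hypothetical helper name (not part of BitNet), and it assumes `uname -m` reports `aarch64` on 64-bit Raspberry Pi OS.

```shell
# Hypothetical helper (not part of BitNet): succeeds when the given
# machine string names a 64-bit ARM system.
is_arm64() {
  case "$1" in
    aarch64|arm64) return 0 ;;
    *) return 1 ;;
  esac
}

# Assumption: uname -m prints "aarch64" on 64-bit Raspberry Pi OS.
arch="$(uname -m)"
if is_arm64 "$arch"; then
  echo "OK: 64-bit ARM detected ($arch)"
else
  echo "Warning: expected aarch64 but found $arch; this guide was tested on 64-bit Raspberry Pi OS only"
fi
```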

1. Install system tools

sudo apt update && sudo apt install -y \
  python3-pip python3-dev cmake build-essential \
  git software-properties-common

2. Install Clang 18 (and lld)

wget -O - https://apt.llvm.org/llvm.sh | sudo bash -s 18
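Once the installer finishes, a quick check that the compilers are on the PATH can save a failed build later. This is only a sketch: `require_cmds` is a hypothetical helper (not part of BitNet or LLVM), and the command names `clang-18` and `clang++-18` are taken from the exports used in step 6.

```shell
# Hypothetical helper (not part of BitNet or LLVM): report every named
# command that is not on PATH; returns non-zero if any is missing.
require_cmds() {
  status=0
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "not found: $cmd"
      status=1
    fi
  done
  return "$status"
}

# clang-18 and clang++-18 are the names exported as CC and CXX in step 6.
if require_cmds clang-18 clang++-18; then
  clang-18 --version | head -n 1
else
  echo "Clang 18 does not appear to be installed; re-run step 2."
fi
```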

3. Create & activate a Python venv

python3 -m venv bitnet-env

source bitnet-env/bin/activate

4. Clone BitNet and install Python deps

git clone --recursive https://github.com/microsoft/BitNet.git

cd BitNet

pip install -r requirements.txt

5. Generate the LUT kernels header & config

Runs the code-generation script to produce include/bitnet-lut-kernels.h and include/kernel_config.ini. These files define the specialized, pre-tiled lookup tables for the 1.58-bit quantization kernels, and CMake needs them to compile the BitNet "mad" routines.

Without this step, CMake will fail with "Cannot find source file include/bitnet-lut-kernels.h." The numbers passed to --BM, --BK, and --bm control the tile sizes for your ARM implementation.

python utils/codegen_tl1.py \
  --model bitnet_b1_58-3B \
  --BM 160,320,320 \
  --BK 64,128,64 \
  --bm 32,64,32
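Because the build in step 6 depends on the two generated files, it can be worth confirming they exist before running CMake. A minimal sketch, run from the BitNet directory: `check_generated` is a hypothetical helper name, and the two paths are the ones named in the description of this step.

```shell
# Hypothetical helper (not part of BitNet): print each path that is not
# a regular file; returns non-zero if any path is missing.
check_generated() {
  missing=0
  for f in "$@"; do
    if [ ! -f "$f" ]; then
      echo "missing: $f"
      missing=1
    fi
  done
  return "$missing"
}

# Paths as named in step 5; run this from the BitNet repository root.
if check_generated include/bitnet-lut-kernels.h include/kernel_config.ini; then
  echo "Generated files are in place."
else
  echo "Re-run the code-generation script before building."
fi
```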

6. Build with Clang (full -O3, auto-vectorization enabled)

export CC=clang-18 CXX=clang++-18

rm -rf build && mkdir build && cd build

cmake .. -DCMAKE_BUILD_TYPE=Release

make -j$(nproc)

cd ..

7. Download the quantized model

huggingface-cli download microsoft/BitNet-b1.58-2B-4T-gguf \
  --local-dir models/BitNet-b1.58-2B-4T

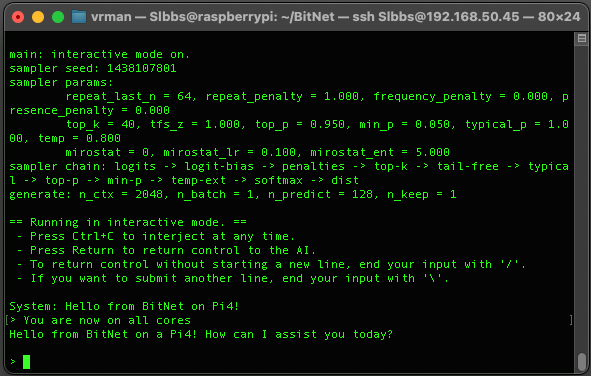

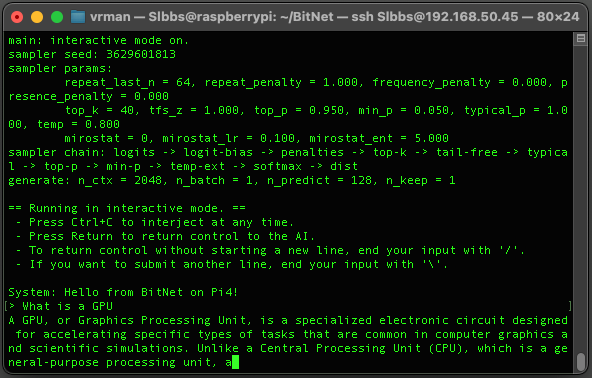

8. Quick interactive test

Launches interactive chat mode to verify that the compiled binaries, the model, and the Python wrapper script all work together.

python run_inference.py \
  -m models/BitNet-b1.58-2B-4T/ggml-model-i2_s.gguf \
  -p "Hello from BitNet on Pi4!" -cnv

9. Full-core inference

Uses all four cores of the Pi 4B for higher throughput and sets a 2048-token context window.

-t 4 runs inference on four threads, one per Pi 4B core.

-c 2048 allows a context of up to 2048 tokens.

python run_inference.py \
  -m models/BitNet-b1.58-2B-4T/ggml-model-i2_s.gguf \
  -p "Hello from BitNet on Pi4!" \
  -cnv -t 4 -c 2048
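If the same command may be reused on a board with a different core count, the thread count can be taken from `nproc` instead of being hard-coded. The sketch below only prints the resulting command line so it can be inspected first; `inference_cmd` is a hypothetical helper, and the flags are the same ones used above.

```shell
# Hypothetical helper (not part of BitNet): print the run_inference.py
# command line for a given thread count and context size.
inference_cmd() {
  threads="$1"
  ctx="$2"
  echo "python run_inference.py -m models/BitNet-b1.58-2B-4T/ggml-model-i2_s.gguf -p \"Hello from BitNet on Pi4!\" -cnv -t $threads -c $ctx"
}

# nproc reports the number of available cores (4 on a Pi 4B).
inference_cmd "$(nproc)" 2048
```

Printing the command rather than running it keeps the sketch side-effect free; the printed line can be pasted into the shell once it looks right.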

Now we can enjoy a fast LLM on our Pi!